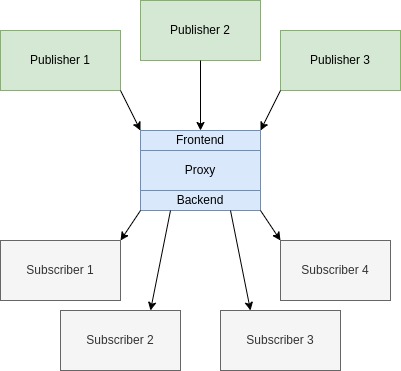

A fully asynchronous publish-subscribe mechanism whereby a forwarder (proxy) relays messages from multiple publishers to multiple subscribers.

Classes

| Class | Description |
| --- | --- |
| UMPS::Messaging::XPublisherXSubscriber::Proxy | A ZeroMQ proxy to be used in the XPUB/XSUB pattern. |
| UMPS::Messaging::XPublisherXSubscriber::ProxyOptions | Options for initializing the proxy. |
| UMPS::Messaging::XPublisherXSubscriber::Publisher | A ZeroMQ publisher. |
| UMPS::Messaging::XPublisherXSubscriber::PublisherOptions | Options for initializing the publisher in the XPUB/XSUB pattern. |
| UMPS::Messaging::XPublisherXSubscriber::Subscriber | The subscriber in an extended publisher/subscriber messaging pattern. |
| UMPS::Messaging::XPublisherXSubscriber::SubscriberOptions | Options for initializing the subscriber in the extended PUB/SUB pattern. |

Despite its simplicity, the publisher-subscriber pattern is not sufficient in practice. Real applications may have multiple data sources (e.g., UUSS field instruments telemetered to import boxes and data streams from other networks served through IRIS), so we will clearly have multiple data publishers. Of course, it is possible to keep adding a subscriber for each data feed in our applications, but this becomes cumbersome and difficult to maintain, particularly when a data feed is dropped. What we really want is one-stop shopping: if a subscriber connects to a particular endpoint then it will be able to receive every message in the data feed, i.e., the subscriber need not know the details of every publisher. For this reason, we introduce the extended publisher-subscriber or xPub-xSub. As a rule, even if I were considering a single-producer, single-consumer application, I would still implement it as an xPub-xSub pattern because experience dictates that even in well-planned applications more publishers always manage to materialize.

The technology required to make this happen is a middleman or proxy. The proxy provides a stable endpoint to which publishers send data and from which subscribers retrieve data. In the parlance of ZeroMQ, data goes into the proxy's frontend and data goes out the proxy's backend.

There really is not much more to this pattern than what you have already seen in An Example. In principle, data is again being sent from producers to subscribers. The main differences are that instead of one publisher we will have three, the number of subscribers is increased from three to four, and there is a proxy.

The publisher is like the previous pub-sub publisher. However, it connects to the proxy. In the verbiage of ZeroMQ, we bind to stable endpoints. Hence, UMPS views content producers and subscribers as ephemeral and proxies as long-lived, stable endpoints. Consequently, your modules will always connect to the uOperator.

The subscriber is exactly like the pub-sub subscriber. In fact, you can use a pub-sub subscriber to connect to the proxy's backend and things will work.

The proxy is a new concept. What this thread does is simply take data from the input port (frontend) and stick it on the output port (backend). It's a worker whose job is to simply take items from one conveyor belt and put them on an adjacent conveyor belt. Surprisingly, this remarkably simple mechanism seems sufficient for many broadcast applications.
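To make the conveyor-belt picture concrete, here is a minimal sketch that uses only the C++ standard library. It is not the UMPS `Proxy` class and it does not use ZeroMQ; `Belt`, `forward`, and `relay` are hypothetical names invented for this illustration, and a thread-safe queue stands in for each socket.

```cpp
#include <condition_variable>
#include <deque>
#include <functional>
#include <mutex>
#include <string>
#include <thread>
#include <utility>
#include <vector>

// Hypothetical stand-in for a socket: a thread-safe FIFO "conveyor belt".
class Belt
{
public:
    // Place an item on the belt and wake one waiting consumer.
    void put(std::string item)
    {
        {
            std::lock_guard<std::mutex> lock(mMutex);
            mItems.push_back(std::move(item));
        }
        mCondition.notify_one();
    }
    // Signal that no further items will be placed on the belt.
    void close()
    {
        {
            std::lock_guard<std::mutex> lock(mMutex);
            mClosed = true;
        }
        mCondition.notify_all();
    }
    // Block until an item is available.  Returns false once the belt is
    // closed and fully drained.
    bool take(std::string &item)
    {
        std::unique_lock<std::mutex> lock(mMutex);
        mCondition.wait(lock, [this] { return !mItems.empty() || mClosed; });
        if (mItems.empty()) { return false; }
        item = std::move(mItems.front());
        mItems.pop_front();
        return true;
    }
private:
    std::mutex mMutex;
    std::condition_variable mCondition;
    std::deque<std::string> mItems;
    bool mClosed{false};
};

// The proxy's entire job: move every item from the frontend to the backend.
void forward(Belt &frontend, Belt &backend)
{
    std::string item;
    while (frontend.take(item)) { backend.put(std::move(item)); }
    backend.close();
}

// Run the forwarder on its own thread, feed the frontend, and collect
// whatever arrives on the backend.
std::vector<std::string> relay(const std::vector<std::string> &messages)
{
    Belt frontend;
    Belt backend;
    std::thread proxy(forward, std::ref(frontend), std::ref(backend));
    for (const auto &message : messages) { frontend.put(message); }
    frontend.close();
    std::vector<std::string> received;
    std::string item;
    while (backend.take(item)) { received.push_back(item); }
    proxy.join();
    return received;
}
```

Calling `relay` with a list of strings returns the same strings in the same order. The forwarder never inspects the payload, which is why such a simple mechanism can serve many different message types.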

Notice that the proxy is running as another thread that must be started and stopped. This is because the proxy is supposed to stay up indefinitely. In general, you will not have to explicitly think about this since the uOperator will be responsible for keeping these proxies alive and open. All you will have to do is connect via the extended publisher or extended subscriber.
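The start and stop life cycle described above can be sketched with the standard library alone. `ToyProxy` below is a hypothetical model, not the UMPS `Proxy` interface: `start` launches the forwarding thread, `stop` asks it to finish and joins it, and two in-memory queues play the roles of the frontend and backend. The sketch assumes `start` and `stop` are called from a single controlling thread.

```cpp
#include <condition_variable>
#include <cstddef>
#include <deque>
#include <mutex>
#include <string>
#include <thread>
#include <utility>

// Hypothetical model of a long-lived proxy.  This is NOT the UMPS API.
class ToyProxy
{
public:
    ~ToyProxy() { stop(); }
    // Launch the forwarding thread.  A second call while running is ignored.
    void start()
    {
        std::lock_guard<std::mutex> lock(mMutex);
        if (mThread.joinable()) { return; }
        mRunning = true;
        mThread = std::thread(&ToyProxy::run, this);
    }
    // Ask the forwarding thread to finish, then wait for it to exit.
    void stop()
    {
        {
            std::lock_guard<std::mutex> lock(mMutex);
            mRunning = false;
        }
        mCondition.notify_all();
        if (mThread.joinable()) { mThread.join(); }
    }
    bool isRunning() const
    {
        std::lock_guard<std::mutex> lock(mMutex);
        return mRunning;
    }
    // Publisher side: place a message on the frontend.
    void send(std::string message)
    {
        {
            std::lock_guard<std::mutex> lock(mMutex);
            mFrontend.push_back(std::move(message));
        }
        mCondition.notify_one();
    }
    // Number of messages moved to the backend so far.
    std::size_t forwarded() const
    {
        std::lock_guard<std::mutex> lock(mMutex);
        return mBackend.size();
    }
private:
    // Forwarding loop: sleep until there is work or a stop request, move
    // everything from the frontend to the backend, and exit once stopped.
    void run()
    {
        std::unique_lock<std::mutex> lock(mMutex);
        while (true)
        {
            mCondition.wait(lock,
                            [this] { return !mFrontend.empty() || !mRunning; });
            while (!mFrontend.empty())
            {
                mBackend.push_back(std::move(mFrontend.front()));
                mFrontend.pop_front();
            }
            if (!mRunning) { return; }
        }
    }
    mutable std::mutex mMutex;
    std::condition_variable mCondition;
    std::deque<std::string> mFrontend;
    std::deque<std::string> mBackend;
    std::thread mThread;
    bool mRunning{false};
};
```

In this sketch `stop` drains any pending frontend messages before the thread exits, so nothing sent before the stop request is dropped. That is a design choice of the toy model and should not be read as a statement about how the UMPS proxy shuts down.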

Lastly, we have the driver code that launches this example. We create seven threads - three publishers and four subscribers. The subscribers connect first and then block until a message is received. Then the publishers are started. Again, this is brittle code since we are specifying a priori the number of messages. Therefore, the producer code must contend with the slow joiner problem.
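The shape of such a driver can be sketched without ZeroMQ or UMPS. In the toy model below, `Mailbox` and `runExample` are hypothetical names, each mailbox stands in for a socket, and the proxy copies every frontend message to every subscriber. As in the example described above, the number of messages is fixed in advance, which is what makes this style of driver brittle.

```cpp
#include <condition_variable>
#include <cstddef>
#include <deque>
#include <mutex>
#include <string>
#include <thread>
#include <utility>
#include <vector>

// Hypothetical thread-safe mailbox standing in for a socket's queue.
class Mailbox
{
public:
    void put(std::string message)
    {
        {
            std::lock_guard<std::mutex> lock(mMutex);
            mMessages.push_back(std::move(message));
        }
        mCondition.notify_one();
    }
    // Block until a message is available, then return it.
    std::string take()
    {
        std::unique_lock<std::mutex> lock(mMutex);
        mCondition.wait(lock, [this] { return !mMessages.empty(); });
        std::string message = std::move(mMessages.front());
        mMessages.pop_front();
        return message;
    }
private:
    std::mutex mMutex;
    std::condition_variable mCondition;
    std::deque<std::string> mMessages;
};

// Toy driver: returns how many messages each subscriber received.
std::vector<std::size_t> runExample(const std::size_t nPublishers,
                                    const std::size_t nSubscribers,
                                    const std::size_t nMessagesPerPublisher)
{
    // The total is known a priori; this is the brittle part.
    const std::size_t nExpected = nPublishers * nMessagesPerPublisher;
    Mailbox frontend;
    std::vector<Mailbox> backends(nSubscribers);
    std::vector<std::size_t> counts(nSubscribers, 0);

    // Subscribers start first and block until messages arrive.
    std::vector<std::thread> subscribers;
    for (std::size_t i = 0; i < nSubscribers; ++i)
    {
        subscribers.emplace_back([&backends, &counts, i, nExpected]
        {
            for (std::size_t k = 0; k < nExpected; ++k)
            {
                backends[i].take();
                ++counts[i];
            }
        });
    }
    // The proxy copies each frontend message to every subscriber.
    std::thread proxy([&frontend, &backends, nExpected]
    {
        for (std::size_t k = 0; k < nExpected; ++k)
        {
            const std::string message = frontend.take();
            for (auto &backend : backends) { backend.put(message); }
        }
    });
    // Publishers start last and only know about the frontend.
    std::vector<std::thread> publishers;
    for (std::size_t p = 0; p < nPublishers; ++p)
    {
        publishers.emplace_back([&frontend, p, nMessagesPerPublisher]
        {
            for (std::size_t m = 0; m < nMessagesPerPublisher; ++m)
            {
                frontend.put("publisher " + std::to_string(p)
                           + " message " + std::to_string(m));
            }
        });
    }
    for (auto &publisher : publishers) { publisher.join(); }
    proxy.join();
    for (auto &subscriber : subscribers) { subscriber.join(); }
    return counts;
}
```

With three publishers, four subscribers, and ten messages per publisher, every subscriber in this model receives all thirty messages. The model cannot lose messages because every mailbox exists before the first publisher starts. Real ZeroMQ subscriptions take time to propagate, so a publisher that sends immediately after connecting can have its first messages dropped, which is the slow joiner problem mentioned above.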

In closing, it should be clear that the xPub-xSub paradigm requires marginally more effort than its naive publisher-subscriber counterpart. However, do not underestimate the xPub-xSub. At UUSS this is the mechanism by which we move all of our data packets, probability packets, and STA/LTA packets, as well as all of our picks, events, and module heartbeats. And the computational cost on the uOperator hub is small. Long story short, when it comes to solving the broadcast problem, you will be hard-pressed to find a more flexible and scalable approach than the xPub-xSub.