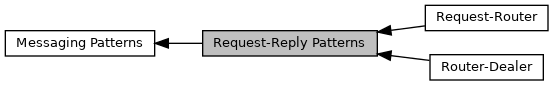

| Modules | Description |
| --- | --- |
| Router-Dealer | The router-dealer combination allows for asynchronous clients interacting with asynchronous servers. |
| Request-Router | The request-router combination allows for an asynchronous server that can be utilized by multiple clients. |

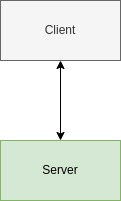

The request-reply mechanism works as its name implies: a client makes a request to a server. Upon receipt of the request message, the server performs the desired processing and returns the result as a response message. Again, while the pattern is conceptually simple, it will soon be clear that it scales: the backend can be supported by an arbitrary number of servers so as to meet the demand of an arbitrary number of clients.

It may not be immediately obvious, but the request-reply mechanism is one of UMPS's major improvements over many existing seismic processing systems. This is because existing systems typically use a conveyor belt approach to processing. In this strategy, the products of one module are broadcast in some way (e.g., a shared memory ring or a message bus) to a downstream module. This strategy is effective when all the processing to be performed is on a single server. In this case, the implicit assumption is that a single server can meet the computational demands of all algorithms in aggregate. Things get messy, however, when multiple physical servers are needed to keep up with the requisite processing.

The challenge with a pub-sub strategy for distributed computing is the logistical hurdle of deciding which instance of a processing algorithm takes action upon receipt of a message so as not to duplicate work. To the best of my knowledge, the USGS NEIC appears to be pursuing a message-centric solution that leverages Kafka. In short, Kafka is a database, so a processing algorithm can lock a message, thereby preventing a separate, parallel processing algorithm from performing the same computation on the same data.

The approach pursued by UMPS is to allow for the creation of RESTful APIs. Processing algorithms are now turned into stateless services that perform the desired calculation to the best of their ability and then return a result. The cloud-based implementation would be a serverless function. The upshot is that meeting the computational demand amounts to continually adding hardware to drive additional instances of a backend service. The downside is that each service requires that the user implement a well-defined API.
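To make the scaling argument concrete, here is a minimal sketch of stateless backends sharing one stream of requests so that each request is handled exactly once. It is not UMPS code: in-process queues and threads stand in for the network and the servers, and the `detect` computation, the `backend-N` names, and the request layout are all hypothetical.

```python
import queue
import threading


def detect(samples):
    """Hypothetical stateless computation: depends only on its input."""
    return max(samples)


def worker(name, requests, replies):
    """One backend instance: take a request, compute, return the result."""
    while True:
        item = requests.get()
        if item is None:  # sentinel: no more work for this instance
            break
        request_id, samples = item
        replies.put((request_id, name, detect(samples)))


requests = queue.Queue()
replies = queue.Queue()

# Meeting more demand amounts to raising this number (an assumed value).
n_backends = 3
backends = [
    threading.Thread(target=worker, args=(f"backend-{i}", requests, replies))
    for i in range(n_backends)
]
for backend in backends:
    backend.start()

# Six requests; each is taken off the queue by exactly one backend.
for request_id in range(6):
    requests.put((request_id, [request_id, request_id + 1, request_id + 2]))
for _ in backends:
    requests.put(None)
for backend in backends:
    backend.join()

handled = []
while not replies.empty():
    handled.append(replies.get())
results = {request_id: value for request_id, _, value in handled}
print(sorted(results.items()))
```

Because a request leaves the queue when one backend takes it, no coordination is needed to avoid duplicate work, and which backend answers a given request does not matter since the computation keeps no state.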

Here, we describe the idea behind the request-reply pattern. For reasons explained below, this pattern is not implemented in UMPS.

As the figure indicates, this pattern is extremely straightforward. A client connects to a server; presumably the server is the stable endpoint in the architecture. The server idly waits for a message from the client. Upon receipt of that message, the server performs the requisite processing. The response is then returned to the waiting client.
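The exchange described above can be sketched with Python's standard `socket` module. This is only an illustration and not UMPS code: the loopback address, the `serve_once` and `request` helpers, the message text, and the upper-casing that stands in for "processing" are all assumptions made for the example.

```python
import socket
import threading


def serve_once(listener: socket.socket) -> None:
    """Accept one client, wait for its request, process it, and reply."""
    connection, _ = listener.accept()
    with connection:
        message = connection.recv(1024)  # idle until the request arrives
        reply = message.upper()          # stand-in for the real processing
        connection.sendall(reply)


def request(address, payload: bytes) -> bytes:
    """Connect to the server, send one request, and block for the reply."""
    with socket.create_connection(address, timeout=5.0) as client:
        client.sendall(payload)
        return client.recv(1024)         # the client waits here


# The server is the stable endpoint: it binds first (port 0 lets the OS choose).
listener = socket.create_server(("127.0.0.1", 0))
address = listener.getsockname()

server = threading.Thread(target=serve_once, args=(listener,))
server.start()

response = request(address, b"locate event")
server.join()
listener.close()
print(response)
```

Note that the client can do nothing else while it waits in `recv`, which is the synchronous behavior at issue in this pattern.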

While straightforward, this implementation is unworkable in practice. This is because the communication between client and server is synchronous. For example, if the service takes too long, then the client may time out. The impatient client may then submit a second request before the first reply arrives. This will break the messaging. Consequently, I have not even bothered implementing this paradigm in UMPS.
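One way to picture how a second request can break the messaging is to assume the client must strictly alternate between sending and receiving, as some messaging libraries require of request sockets. The toy model below encodes only that assumption; `LockstepClient` is a hypothetical class written for this illustration and is not part of UMPS or of any library.

```python
class LockstepClient:
    """Toy client that must alternate: send, receive, send, receive, ..."""

    def __init__(self):
        self.awaiting_reply = False

    def send(self, message):
        if self.awaiting_reply:
            raise RuntimeError("cannot send: still awaiting the previous reply")
        self.awaiting_reply = True
        return message

    def receive(self, reply):
        if not self.awaiting_reply:
            raise RuntimeError("cannot receive: no request is outstanding")
        self.awaiting_reply = False
        return reply


client = LockstepClient()
client.send("locate event 1")

# The service is slow, the client times out, and it impatiently retries.
try:
    client.send("locate event 1")
    outcome = "accepted"
except RuntimeError as error:
    outcome = f"rejected: {error}"

print(outcome)
```

Under this assumption the retry is rejected outright, and the client cannot make progress until the late reply to the first request finally arrives.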